Video-Specific Autoencoders for Exploring, Editing and Transmitting Videos.

We study video-specific autoencoders that allow a human user to explore, edit, and efficiently transmit videos. Prior work has looked at these problems (and sub-problems) independently and proposed different formulations. In this work, we train a simple autoencoder (from scratch) on multiple frames of a specific video. We observe that: (1) latent codes learned by a video-specific autoencoder capture spatial and temporal properties of that video; and (2) autoencoders can project out-of-sample inputs onto the video-specific manifold. These two properties allow us to explore, edit, and efficiently transmit a video using one learned representation. For example, linear operations on latent codes allow users to visualize the contents of a video. Associating the latent codes of a video and using manifold projection enables users to make desired edits. Interpolating latent codes and using manifold projection allows sparse low-res frames to be transmitted over a network.
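The transmission use case relies on filling in latent codes between sparsely sent frames. Below is a minimal sketch of that interpolation step only, assuming each transmitted low-res frame has already been encoded to a latent vector; the decoder that turns the dense codes back into frames is not shown. The function name `interpolate_latents` is hypothetical, and plain linear interpolation is an assumption for illustration, not necessarily the authors' exact scheme.

```python
from bisect import bisect_right
from typing import Dict, List

Latent = List[float]


def interpolate_latents(keyframes: Dict[int, Latent], num_frames: int) -> List[Latent]:
    """Fill in a latent code for every frame from sparsely transmitted keyframes.

    Hypothetical helper: `keyframes` maps a frame index to the latent code of a
    transmitted (low-res) frame. Codes between two keyframes are linearly
    interpolated; frames outside the keyframe range reuse the nearest keyframe.
    """
    if not keyframes:
        raise ValueError("at least one keyframe is required")
    times = sorted(keyframes)
    dense: List[Latent] = []
    for t in range(num_frames):
        if t <= times[0]:
            dense.append(list(keyframes[times[0]]))
        elif t >= times[-1]:
            dense.append(list(keyframes[times[-1]]))
        else:
            # Enclosing keyframes satisfy times[i - 1] <= t < times[i].
            i = bisect_right(times, t)
            t0, t1 = times[i - 1], times[i]
            alpha = (t - t0) / (t1 - t0)
            z0, z1 = keyframes[t0], keyframes[t1]
            dense.append([(1 - alpha) * a + alpha * b for a, b in zip(z0, z1)])
    return dense


if __name__ == "__main__":
    # Only frames 0 and 4 were "transmitted"; frames 1-3 are filled in.
    codes = interpolate_latents({0: [0.0, 0.0], 4: [4.0, 8.0]}, num_frames=5)
    print(codes)  # → [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
```

In the full pipeline, each interpolated code would then be decoded by the video-specific autoencoder, so the receiver reconstructs every frame while only the sparse keyframes cross the network.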

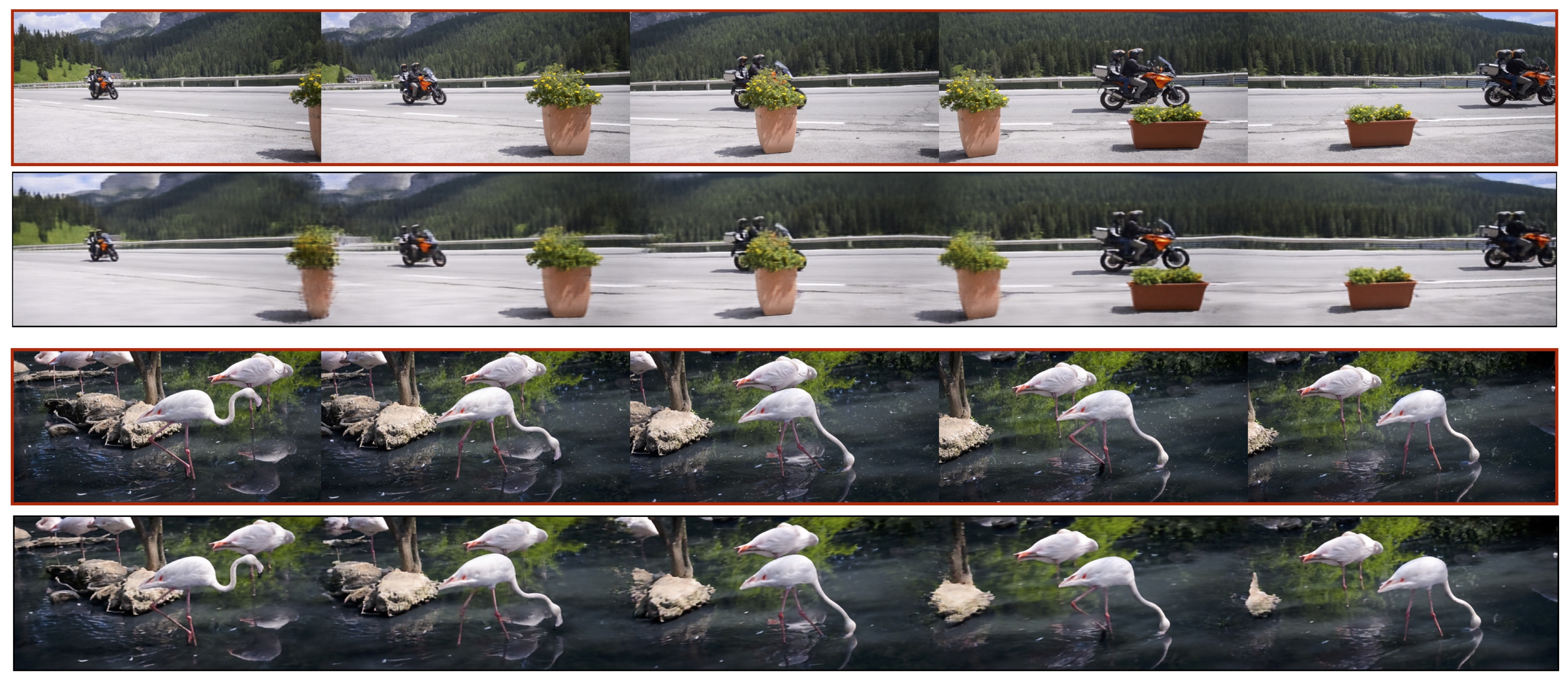

K. Wang, D. Ramanan, and A. Bansal. Video-Specific Autoencoders for Exploring, Editing and Transmitting Videos.

We naively concatenate spread-out frames of a video and feed the result through the video-specific autoencoder. The learned model generates a seamless output.

10x Spatial Super-Resolution via the Reprojection Property
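The reprojection idea behind super-resolution can be illustrated with a deliberately tiny stand-in. This sketch assumes a *linear* autoencoder whose tied encoder/decoder is an orthonormal basis spanning the training frames (built with Gram-Schmidt), and uses 1-D "frames" and a 2x factor for brevity. The actual model is a nonlinear convolutional autoencoder trained from scratch, so this only shows the principle: naively upsample a low-res frame, then encode and decode it to project it back onto the video-specific manifold. All function names here are hypothetical.

```python
from typing import List

Frame = List[float]  # a 1-D toy "frame" of pixel intensities


def dot(a: Frame, b: Frame) -> float:
    return sum(x * y for x, y in zip(a, b))


def fit_linear_autoencoder(frames: List[Frame], tol: float = 1e-9) -> List[Frame]:
    """Orthonormal basis (Gram-Schmidt) spanning the training frames.

    The basis acts as a tied-weight linear autoencoder: encoding takes dot
    products with each basis vector, decoding sums the weighted basis vectors.
    """
    basis: List[Frame] = []
    for f in frames:
        v = list(f)
        for b in basis:
            c = dot(v, b)
            v = [x - c * y for x, y in zip(v, b)]
        norm = dot(v, v) ** 0.5
        if norm > tol:  # skip frames that are already in the span
            basis.append([x / norm for x in v])
    return basis


def encode(x: Frame, basis: List[Frame]) -> List[float]:
    return [dot(x, b) for b in basis]


def decode(z: List[float], basis: List[Frame]) -> Frame:
    out = [0.0] * len(basis[0])
    for c, b in zip(z, basis):
        out = [o + c * y for o, y in zip(out, b)]
    return out


def downsample_avg(x: Frame, factor: int) -> Frame:
    return [sum(x[i:i + factor]) / factor for i in range(0, len(x), factor)]


def upsample_nearest(x: Frame, factor: int) -> Frame:
    return [v for v in x for _ in range(factor)]


def super_resolve(low: Frame, factor: int, basis: List[Frame]) -> Frame:
    """Naive nearest-neighbor upsampling, then projection onto the frame span."""
    return decode(encode(upsample_nearest(low, factor), basis), basis)


if __name__ == "__main__":
    basis = fit_linear_autoencoder([[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0]])
    target = [2.0, 3.0, 4.0, 5.0]           # unseen frame lying on the toy "manifold"
    low = downsample_avg(target, factor=2)  # [2.5, 4.5]
    print(upsample_nearest(low, 2))         # naive: [2.5, 2.5, 4.5, 4.5]
    print(super_resolve(low, 2, basis))     # approximately [2.3, 3.1, 3.9, 4.7]
```

Because an orthogonal projection is idempotent, a frame already on the manifold passes through unchanged, while the blocky upsampled input is pulled to the closest (in least squares) frame the toy "video" can express; here that cuts the squared error against `target` from 1.0 to about 0.2.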

Acknowledgements