http://cs.cmu.edu/~aayushb/hallucinate/

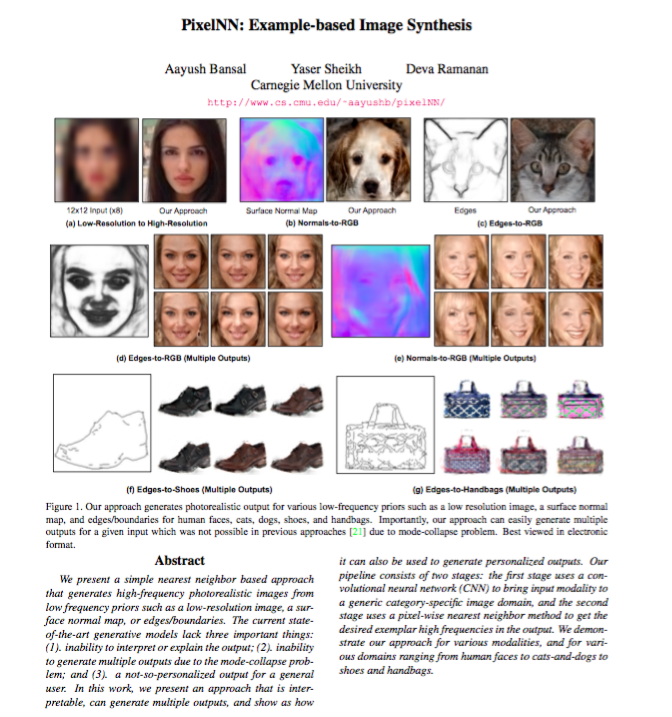

PixelNN: Example-based Image Synthesis

Aayush Bansal, Yaser Sheikh, Deva Ramanan

Abstract

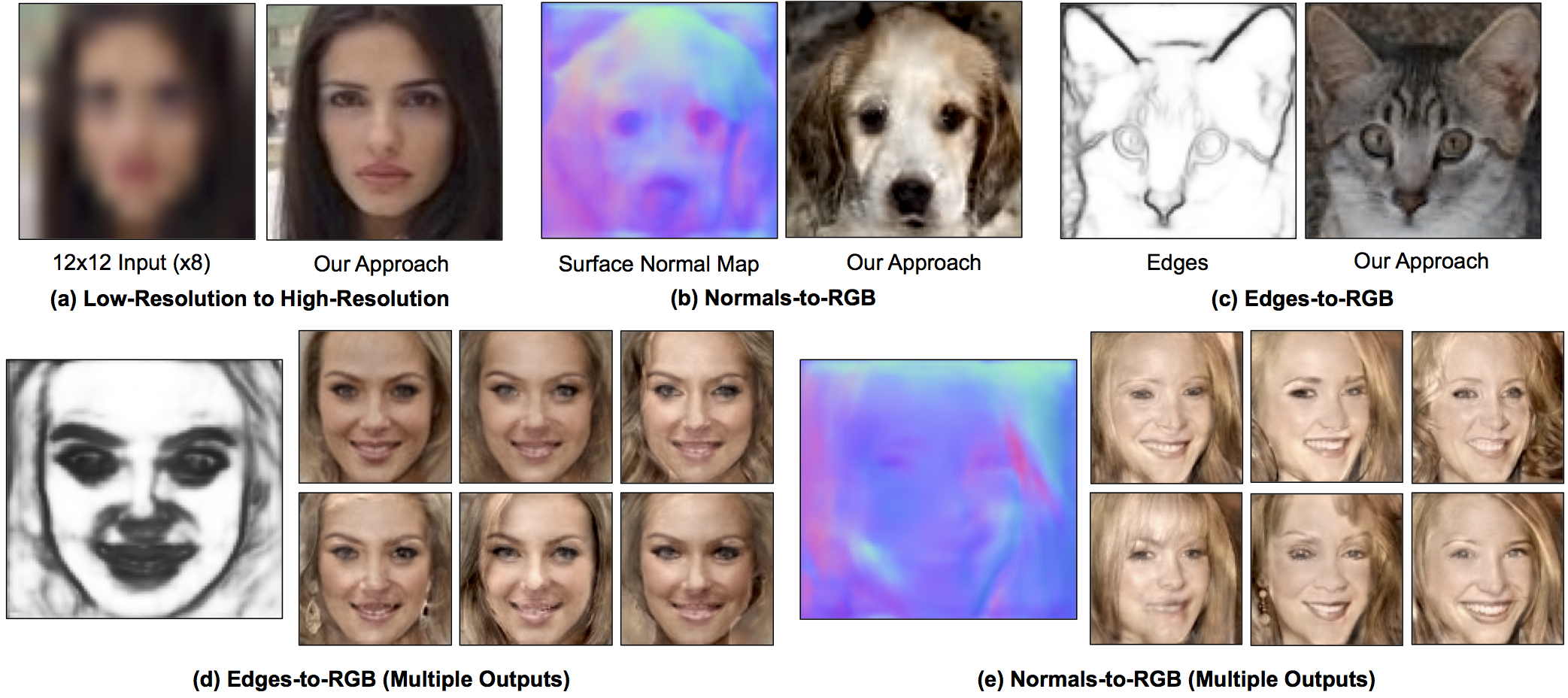

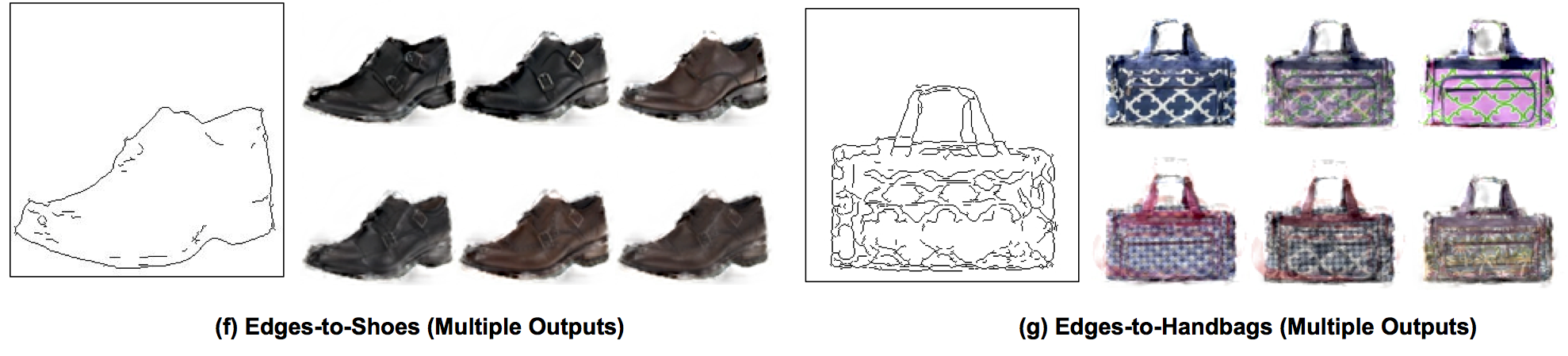

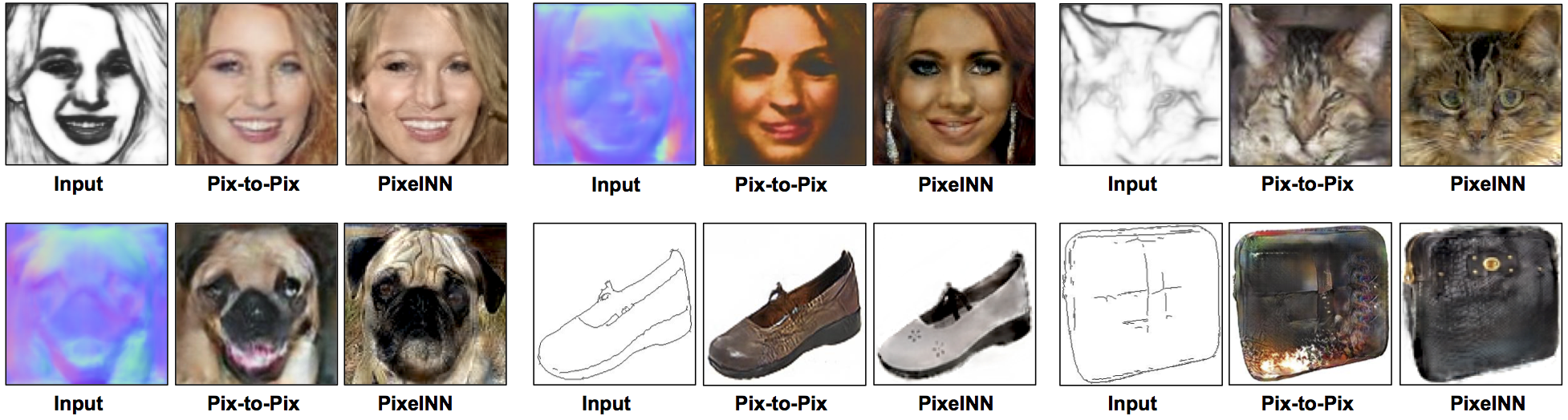

We present a simple nearest-neighbor (NN) approach that synthesizes high-frequency photorealistic images from an "incomplete" signal such as a low-resolution image, a surface normal map, or edges. Current state-of-the-art deep generative models designed for such conditional image synthesis have two important limitations: (1) they are unable to generate a large set of diverse outputs, due to the mode collapse problem; and (2) they are not interpretable, making it difficult to control the synthesized output. We demonstrate that NN approaches potentially address these limitations but suffer in accuracy on small datasets. We design a simple pipeline that combines the best of both worlds: the first stage uses a convolutional neural network (CNN) to map the input to an (overly smoothed) image, and the second stage uses a pixel-wise nearest-neighbor method to map the smoothed output to multiple high-quality, high-frequency outputs in a controllable manner. We demonstrate our approach for various input modalities and for various domains, ranging from human faces to cats-and-dogs to shoes and handbags.
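The second stage of this pipeline can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: as a stated assumption, each pixel is described by its raw 3x3 RGB neighborhood in the smoothed image (a hypothetical stand-in for learned CNN features), and `patch_features`, `pixelwise_nn`, and `diverse_outputs` are hypothetical names. For every pixel of the smoothed output, the sketch finds the closest pixel descriptor among the exemplars' smoothed images and copies the RGB value at that location from the corresponding real training image. Matching against different subsets of exemplars yields different outputs.

```python
import numpy as np

def patch_features(img, radius=1):
    """Describe each pixel by its (2r+1)x(2r+1) RGB neighborhood.
    Hypothetical stand-in for learned CNN features."""
    h, w, c = img.shape
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    shifts = [padded[dy:dy + h, dx:dx + w]
              for dy in range(2 * radius + 1) for dx in range(2 * radius + 1)]
    return np.concatenate(shifts, axis=2).reshape(h * w, -1)  # (h*w, d)

def pixelwise_nn(smoothed, train_smoothed, train_real, radius=1):
    """Replace every pixel of `smoothed` with the real RGB value found at the
    location of its nearest neighbor among the exemplars' smoothed images.
    train_smoothed[i] and train_real[i] must have the same shape."""
    h, w, c = smoothed.shape
    query = patch_features(smoothed, radius)
    bank = np.concatenate([patch_features(t, radius) for t in train_smoothed])
    colors = np.concatenate([r.reshape(-1, c) for r in train_real])
    # Brute-force squared L2 distances: ||q||^2 - 2 q.b + ||b||^2
    d2 = ((query ** 2).sum(1)[:, None] - 2 * query @ bank.T
          + (bank ** 2).sum(1)[None, :])
    nearest = d2.argmin(axis=1)
    return colors[nearest].reshape(h, w, c)

def diverse_outputs(smoothed, train_smoothed, train_real, k, num_outputs, seed=0):
    """Produce several outputs by matching against different random subsets of
    k exemplars (an illustrative assumption for how diversity could be obtained)."""
    rng = np.random.default_rng(seed)
    outputs = []
    for _ in range(num_outputs):
        subset = rng.choice(len(train_real), size=k, replace=False)
        outputs.append(pixelwise_nn(smoothed,
                                    [train_smoothed[i] for i in subset],
                                    [train_real[i] for i in subset]))
    return outputs

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random arrays stand in for CNN outputs and real training images.
    query = rng.random((16, 16, 3))
    bank_smoothed = [rng.random((16, 16, 3)) for _ in range(5)]
    bank_real = [rng.random((16, 16, 3)) for _ in range(5)]
    outs = diverse_outputs(query, bank_smoothed, bank_real, k=2, num_outputs=3)
    print([o.shape for o in outs])
```

Because every output pixel is copied from a specific location in a specific training image, each synthesized pixel can be traced back to its source exemplar. The brute-force distance matrix grows with (query pixels) x (exemplar pixels), so a practical version would need an approximate nearest-neighbor index or a smaller candidate set.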

Paper

PixelNN: Example-based Image Synthesis.

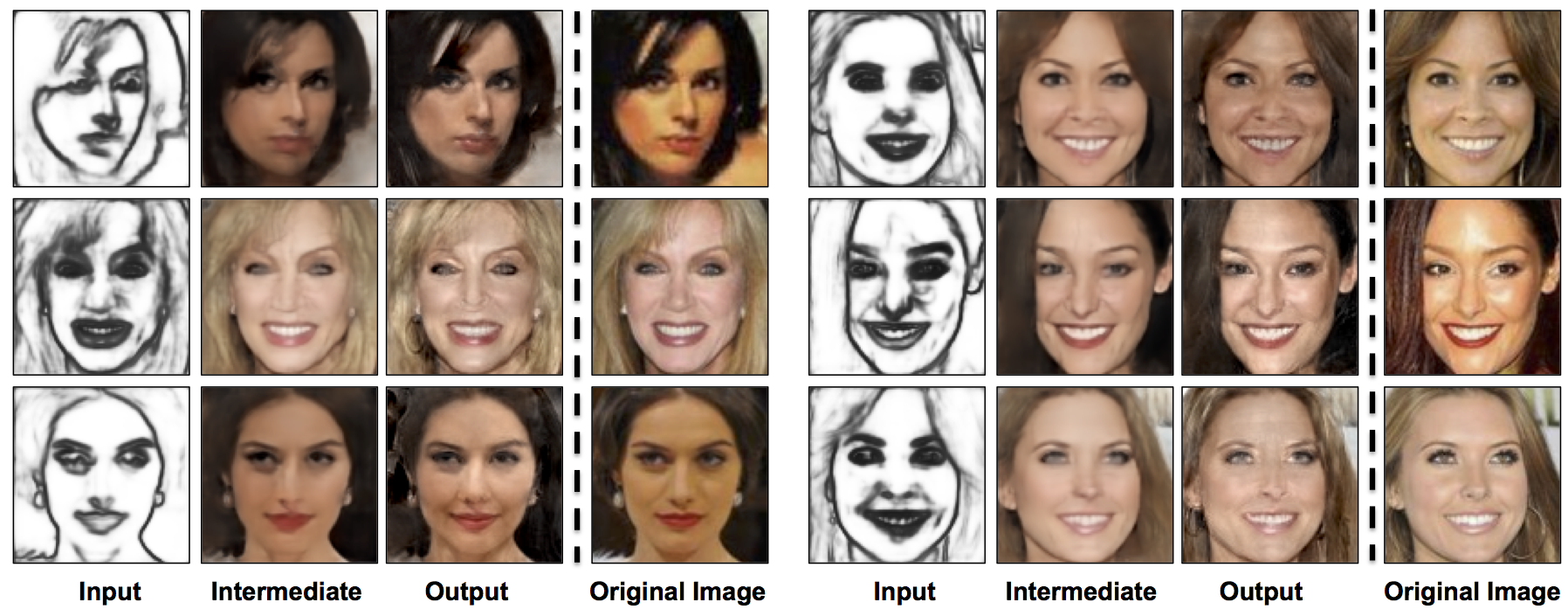

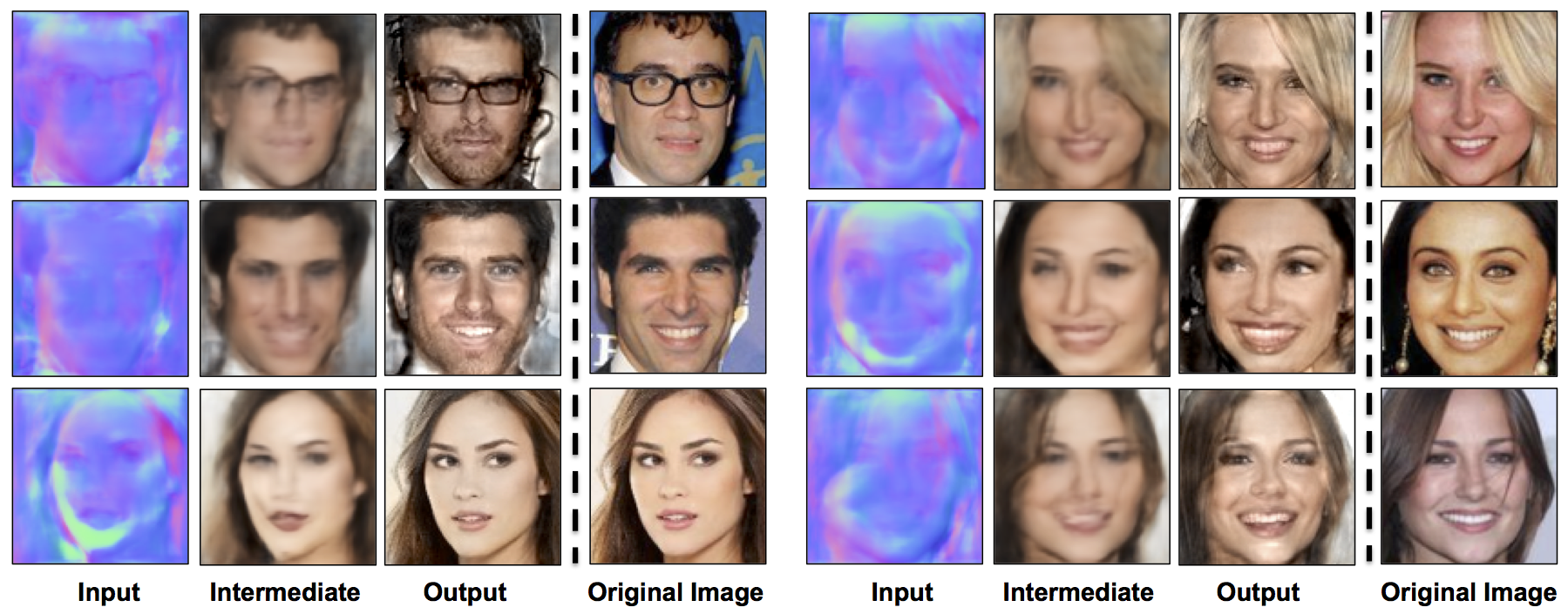

Comparison with Pix-to-Pix

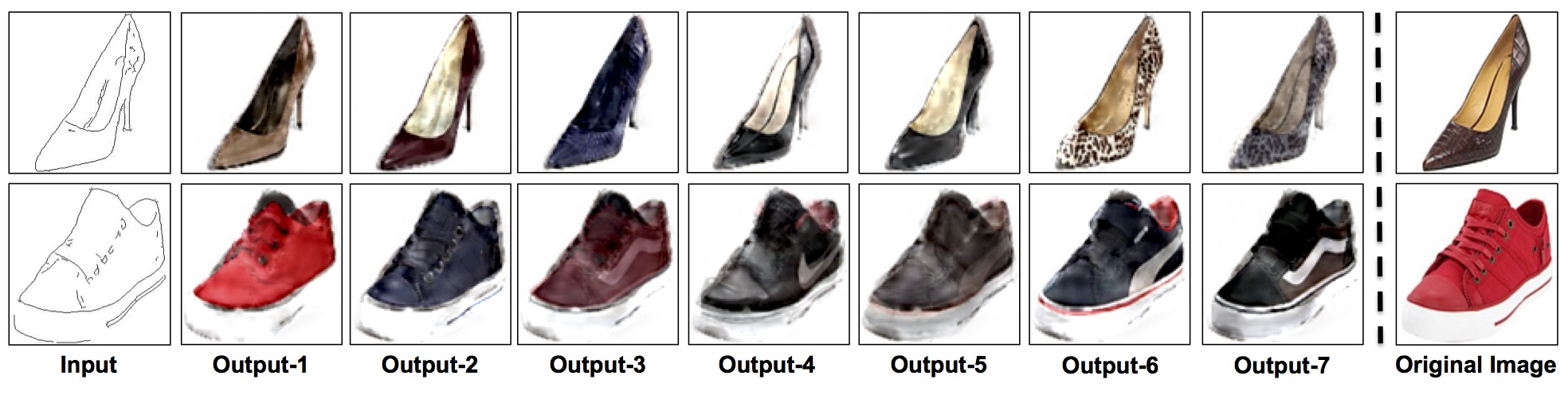

Multiple Outputs

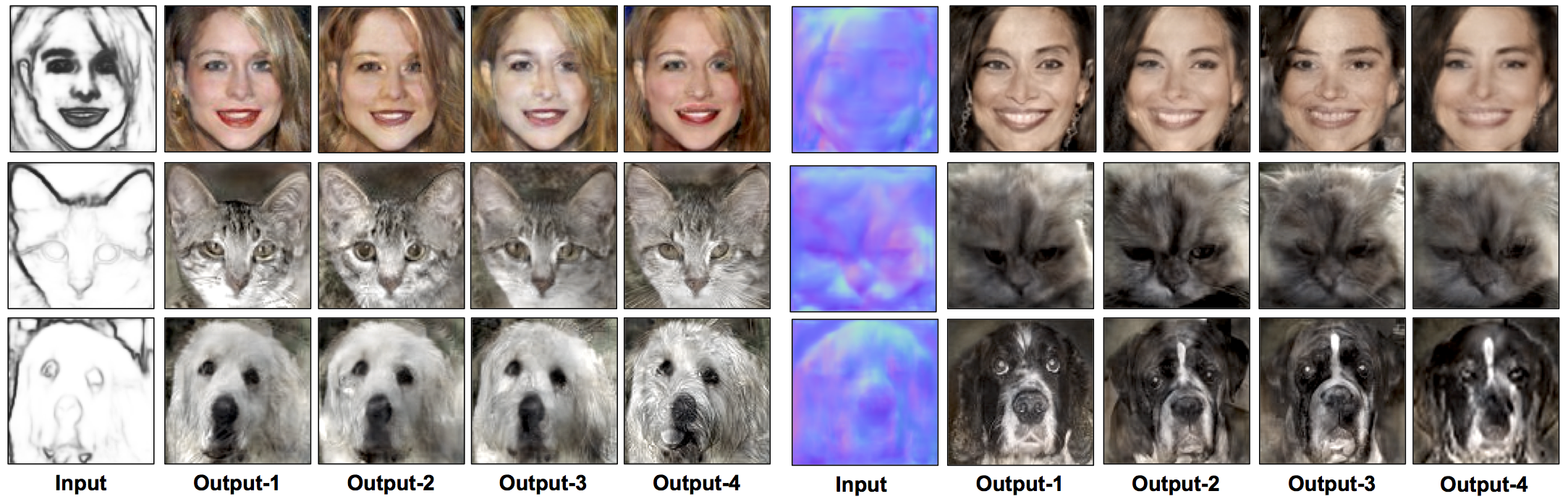

Edges-to-Faces

Normals-to-Faces

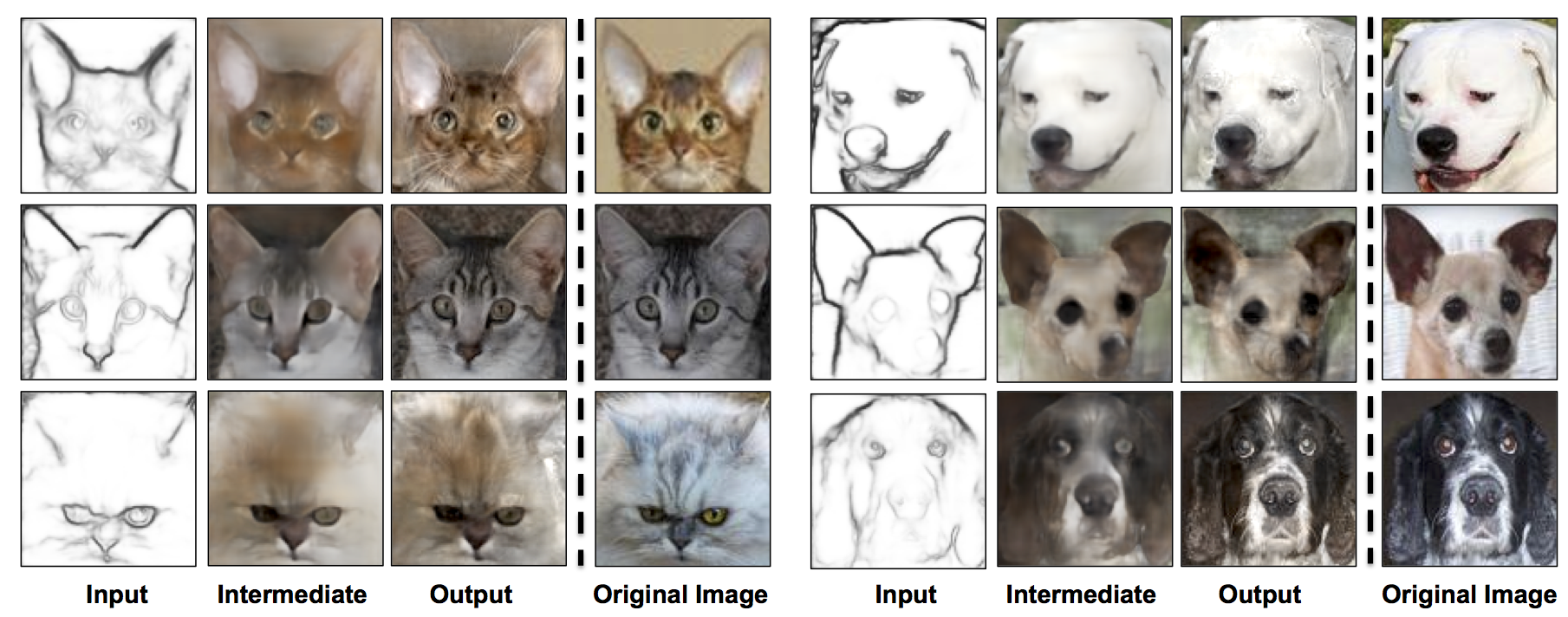

Edges-to-Cats-&-Dogs

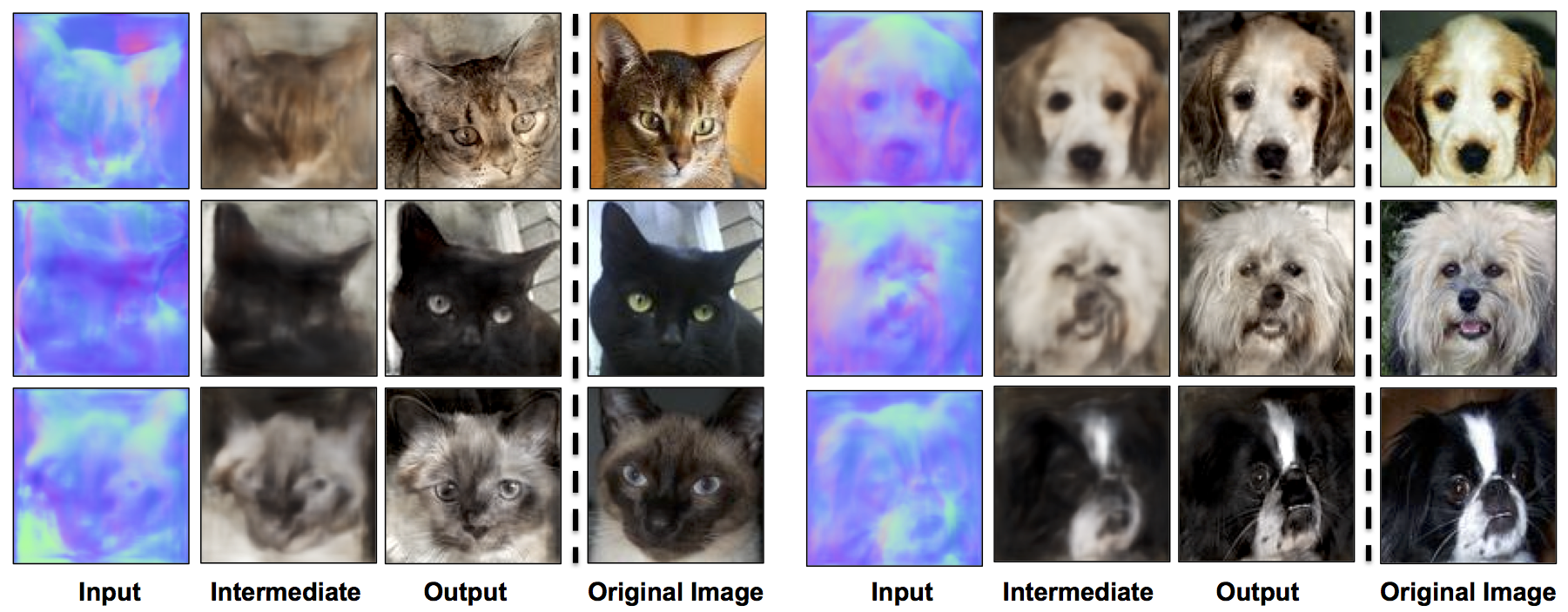

Normals-to-Cats-&-Dogs

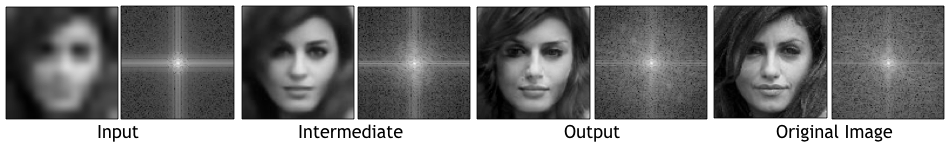

Example Frequency Analysis

We performed a frequency analysis using the fast Fourier transform (FFT) to examine the frequency content of our output images.
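The page does not specify how the FFT analysis was carried out. One common approach, sketched here under that caveat, is to compute the radially averaged power spectrum of a grayscale image and the fraction of spectral energy beyond a cutoff radius; an over-smoothed image (such as a first-stage CNN output) should place less energy at high radii than a sharp one. The names `radial_power_spectrum`, `high_frequency_ratio`, and `box_blur`, and the default `cutoff=0.5`, are illustrative assumptions.

```python
import numpy as np

def _centered_power(gray):
    """Power spectrum with zero frequency shifted to the center, plus each
    coefficient's distance from the center (in frequency-grid units)."""
    f = np.fft.fftshift(np.fft.fft2(gray - gray.mean()))  # remove DC before transforming
    power = np.abs(f) ** 2
    h, w = gray.shape
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2)
    return power, radius

def radial_power_spectrum(gray, num_bins=32):
    """Mean power in `num_bins` concentric rings, from low to high frequency."""
    power, radius = _centered_power(gray)
    bins = np.minimum((radius / radius.max() * num_bins).astype(int), num_bins - 1)
    totals = np.bincount(bins.ravel(), weights=power.ravel(), minlength=num_bins)
    counts = np.bincount(bins.ravel(), minlength=num_bins)
    return totals / np.maximum(counts, 1)

def high_frequency_ratio(gray, cutoff=0.5):
    """Fraction of (non-DC) spectral energy beyond `cutoff` * max radius."""
    power, radius = _centered_power(gray)
    total = power.sum()
    if total == 0:  # constant image: no non-DC energy at all
        return 0.0
    return float(power[radius > cutoff * radius.max()].sum() / total)

def box_blur(gray, radius=1):
    """Simple periodic box blur, used here only to produce a smoothed test image."""
    acc = np.zeros(gray.shape, dtype=float)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            acc += np.roll(gray, (dy, dx), axis=(0, 1))
    return acc / (2 * radius + 1) ** 2

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.random((64, 64))
    smooth = box_blur(sharp, radius=2)
    print("sharp :", round(high_frequency_ratio(sharp), 3))
    print("smooth:", round(high_frequency_ratio(smooth), 3))
```

Plotting `np.log(radial_power_spectrum(img))` for a first-stage output and for a final output on the same axes is one way to visualize how much high-frequency detail the second stage adds.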

Please send comments and questions to Aayush Bansal.