http://cs.cmu.edu/~aayushb/SST/

Streaming Self-Training via Domain-Agnostic Unlabeled Images

Zhiqiu Lin, Deva Ramanan, Aayush Bansal

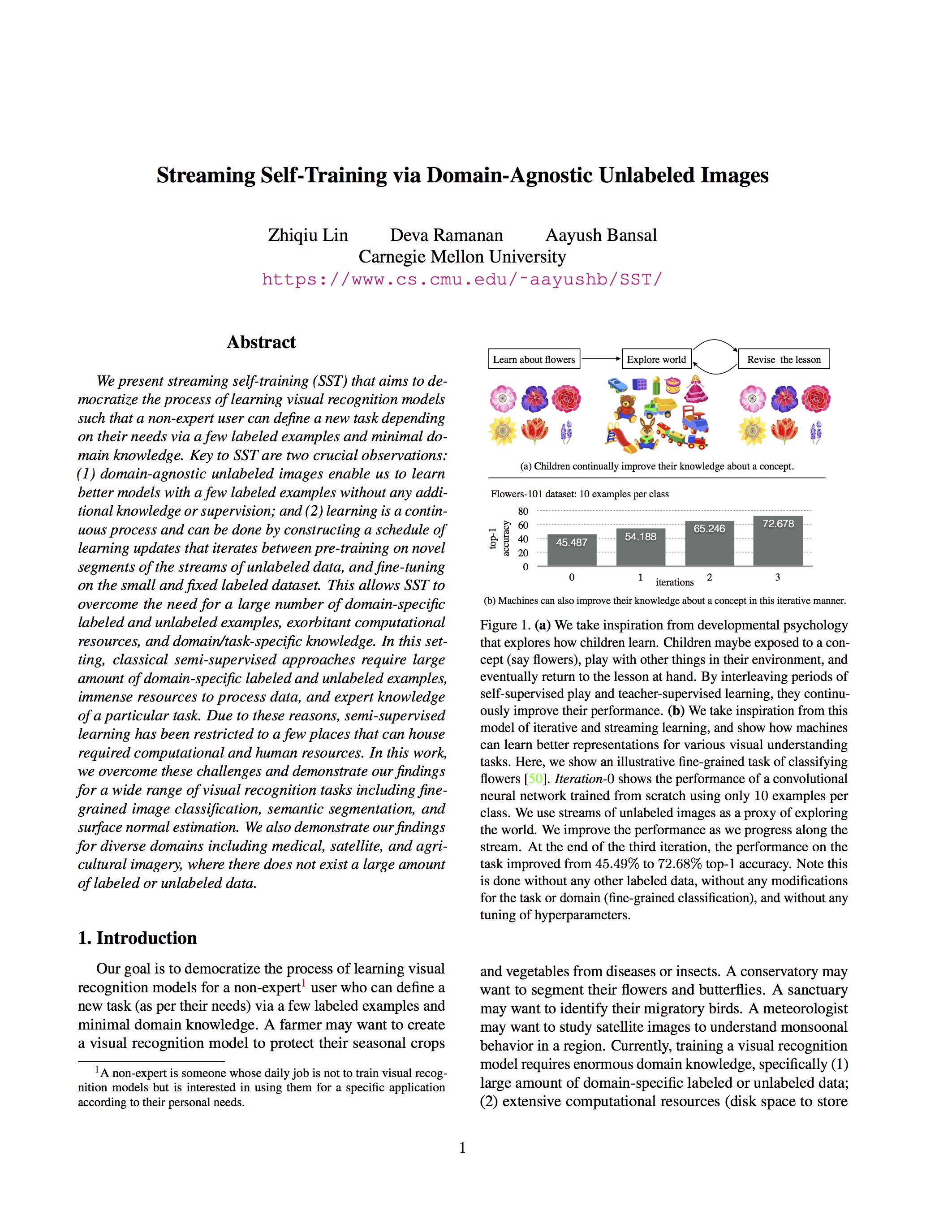

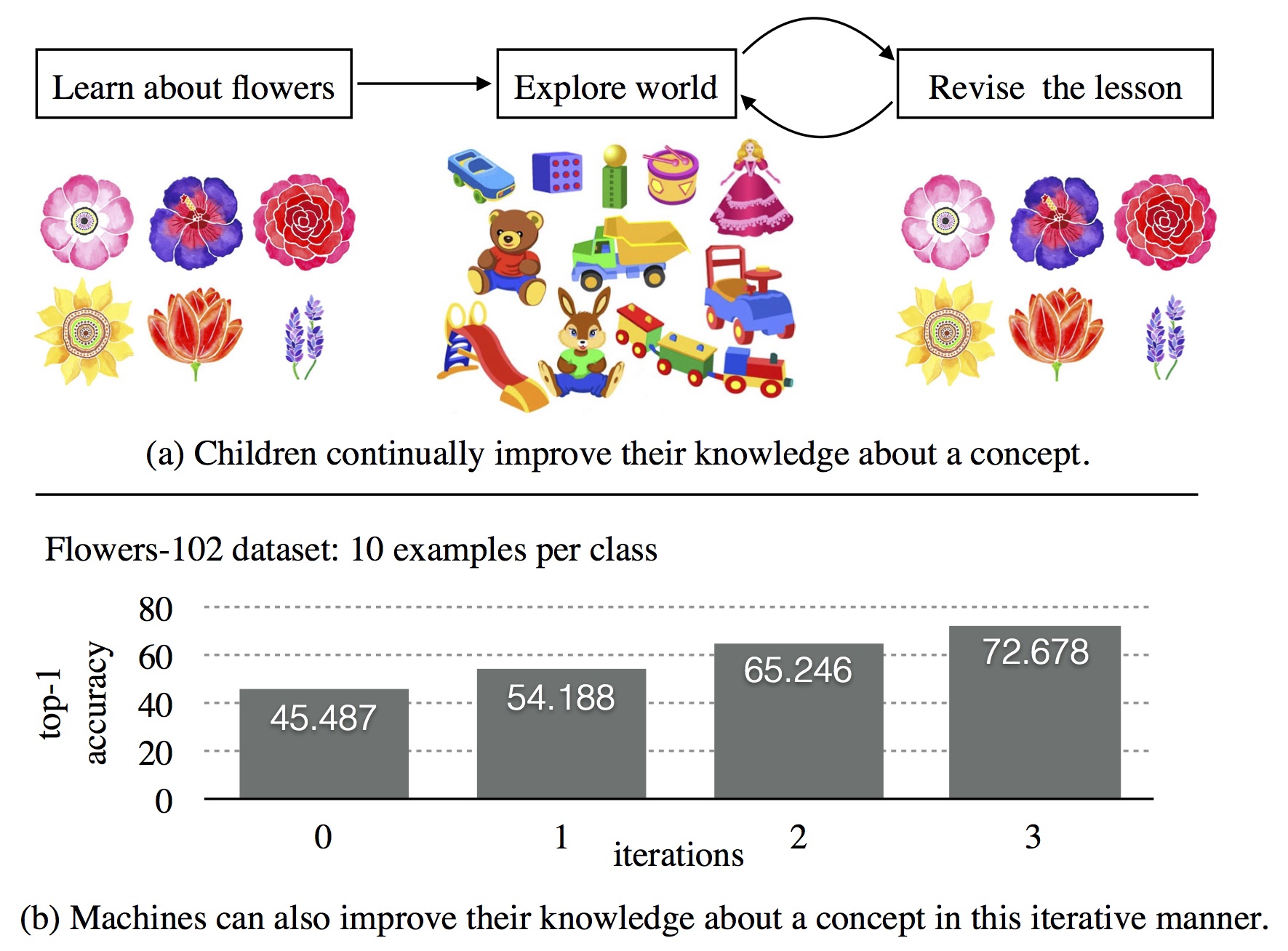

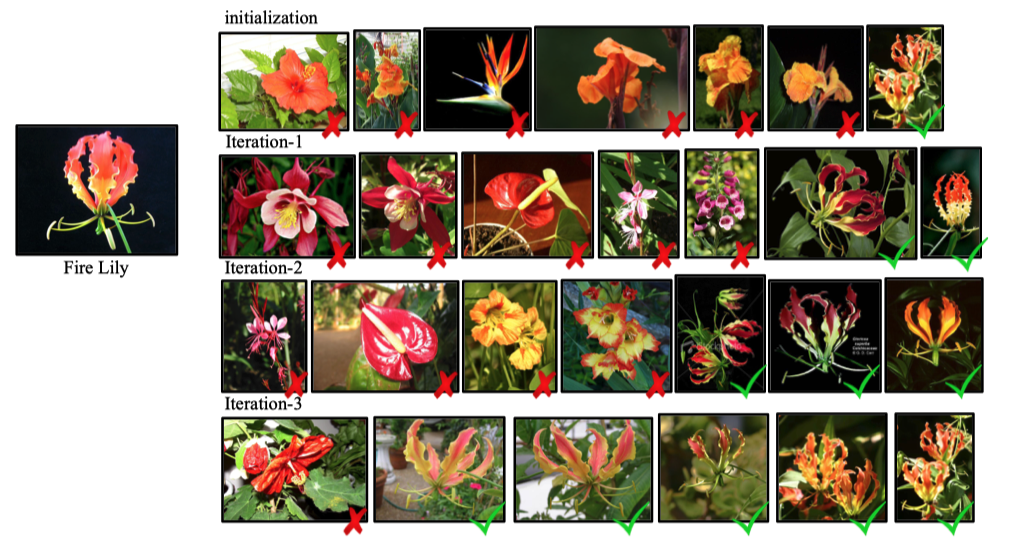

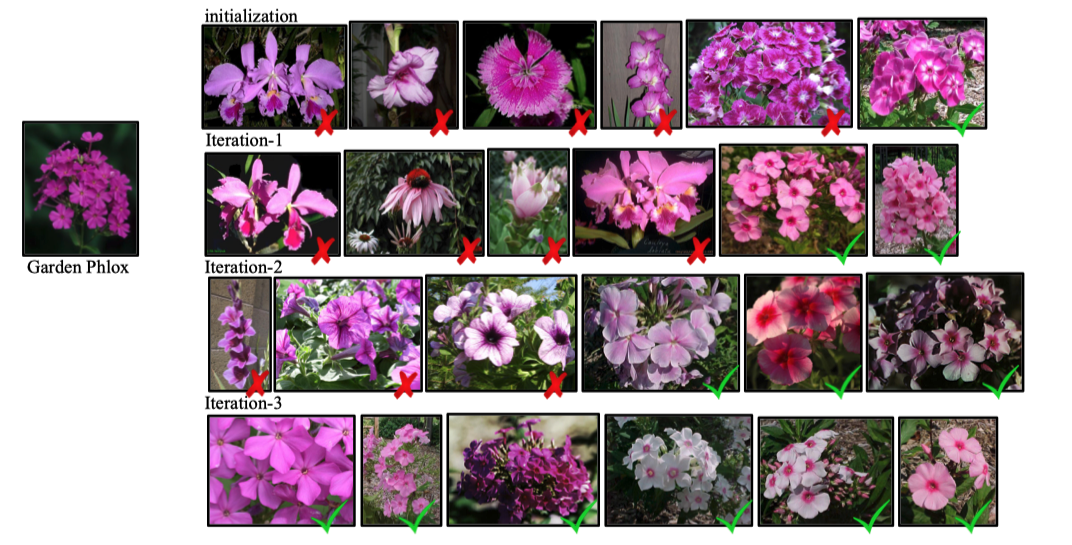

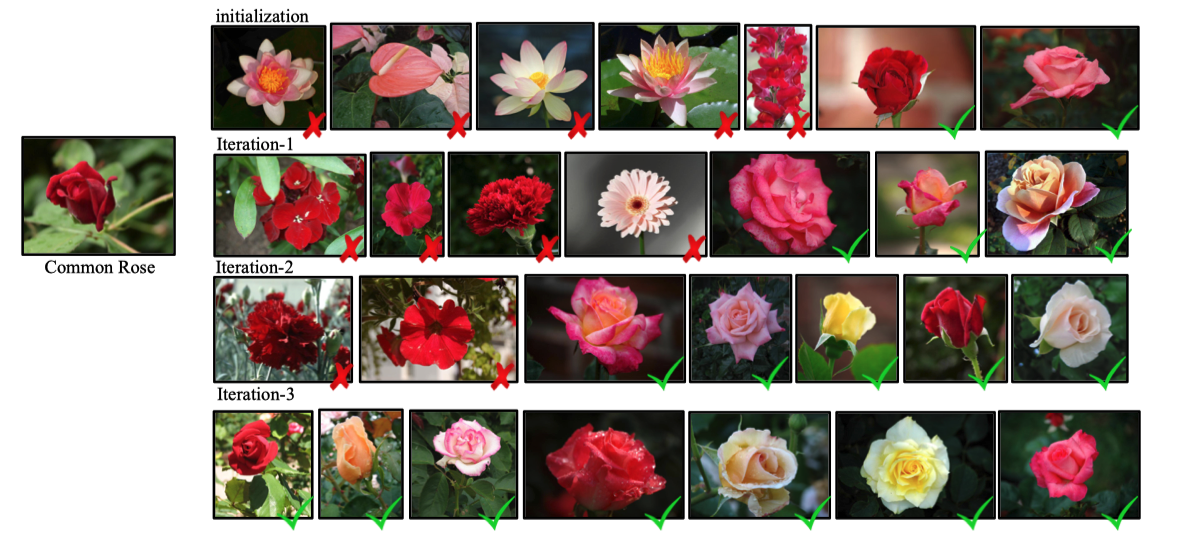

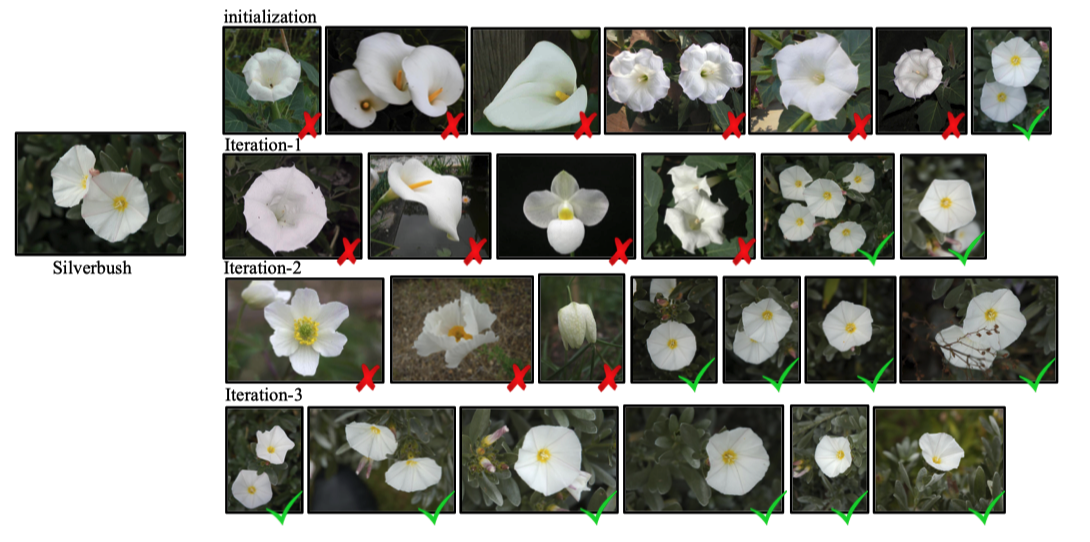

(a) We take inspiration from developmental psychology that explores how children learn. Children may be exposed to a concept (say flowers), play with other things in their environment, and eventually return to the lesson at hand. By interleaving periods of self-supervised play and teacher-supervised learning, they continuously improve their performance. (b) We take inspiration from this model of iterative and streaming learning, and show how machines can learn better representations for various visual understanding tasks. Here, we show an illustrative fine-grained task of classifying flowers. Iteration-0 shows the performance of a convolutional neural network trained from scratch using only 10 examples per class. We use streams of unlabeled images as a proxy for exploring the world. We improve the performance as we progress along the stream. At the end of the third iteration, the performance on the task improved from 45.49% to 72.68% top-1 accuracy. Note that this is done without any other labeled data, without any modifications for the task or domain (fine-grained classification), without any tuning of hyperparameters, and with a simple softmax loss.

Abstract

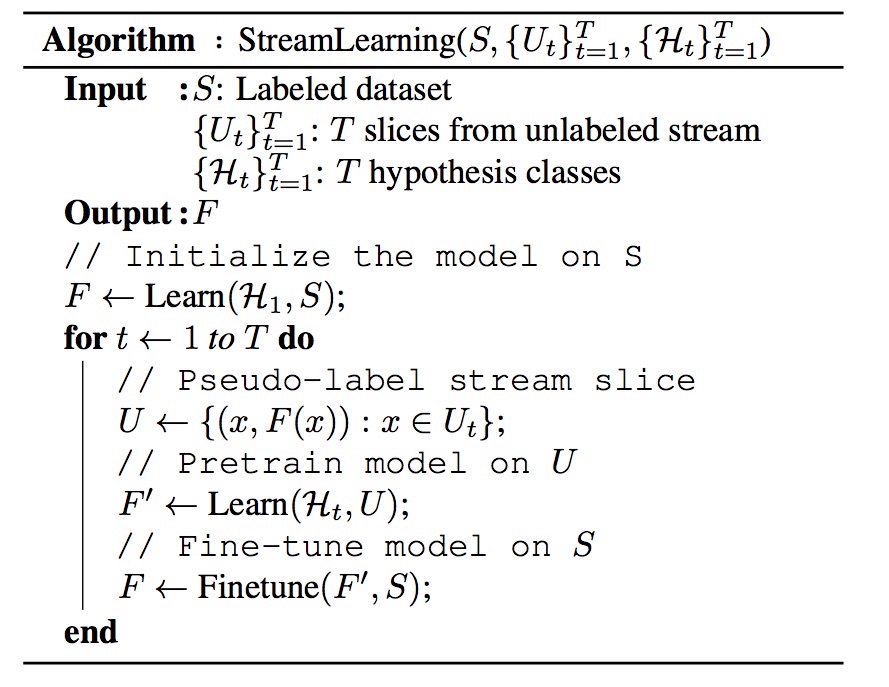

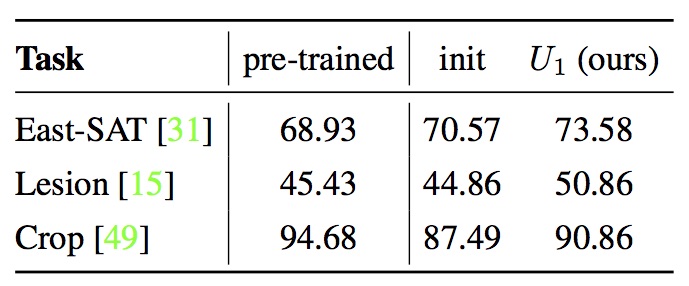

We present streaming self-training (SST), which aims to democratize the process of learning visual recognition models such that a non-expert user can define a new task depending on their needs via a few labeled examples and minimal domain knowledge. Key to SST are two crucial observations: (1) domain-agnostic unlabeled images enable us to learn better models with a few labeled examples without any additional knowledge or supervision; and (2) learning is a continuous process and can be done by constructing a schedule of learning updates that iterates between pre-training on novel segments of the streams of unlabeled data, and fine-tuning on the small and fixed labeled dataset. This allows SST to overcome the need for a large number of domain-specific labeled and unlabeled examples, exorbitant computational resources, and domain/task-specific knowledge. In this setting, classical semi-supervised approaches require a large amount of domain-specific labeled and unlabeled examples, immense resources to process data, and expert knowledge of a particular task. For these reasons, semi-supervised learning has been restricted to the few places that can house the required computational and human resources. In this work, we overcome these challenges and demonstrate our findings for a wide range of visual recognition tasks including fine-grained image classification, semantic segmentation, and surface normal estimation. We also demonstrate our findings for diverse domains including medical, satellite, and agricultural imagery, where there does not exist a large amount of labeled or unlabeled data.
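The learning schedule described in the abstract (train on the labeled set, then repeatedly pre-train on a novel segment of the unlabeled stream and fine-tune on the same small labeled set) can be sketched in a few lines of Python. This is a minimal, hypothetical sketch, not the authors' code. It assumes that "pre-training" on a new segment means training a fresh model on labels predicted by the current model for that segment (a common self-training choice; the exact pre-training objective is not specified here). All names (`streaming_self_training`, `fit_centroids`, `finetune_centroids`, `nearest_centroid`) are illustrative, and a 1-D nearest-centroid classifier stands in for a convolutional network so the example runs without a deep-learning library.

```python
from itertools import islice
from typing import Callable, Dict, Iterable, List, Sequence, Tuple, TypeVar

M = TypeVar("M")              # model type (hypothetical stand-in for a CNN)
Example = Tuple[float, int]   # (input, class label); a float stands in for an image


def streaming_self_training(
    labeled: Sequence[Example],
    stream: Iterable[float],
    train_from_scratch: Callable[[Sequence[Example]], M],
    finetune: Callable[[M, Sequence[Example]], M],
    predict: Callable[[M, float], int],
    num_iterations: int,
    segment_size: int,
) -> List[M]:
    """Return the model after iteration 0 and after each streaming iteration."""
    it = iter(stream)
    # Iteration 0: train only on the small, fixed labeled set.
    model = train_from_scratch(labeled)
    history = [model]
    for _ in range(num_iterations):
        # Take a novel segment of the unlabeled stream; it is never revisited.
        segment = list(islice(it, segment_size))
        if not segment:
            break  # stream exhausted
        # Assumption: pre-train by pseudo-labeling the segment with the current model.
        pseudo = [(x, predict(model, x)) for x in segment]
        model = train_from_scratch(pseudo)
        # Fine-tune on the same small labeled set at every iteration.
        model = finetune(model, labeled)
        history.append(model)
    return history


# --- Hypothetical toy model: 1-D nearest-centroid classifier ---
def fit_centroids(data: Sequence[Example]) -> Dict[int, float]:
    sums: Dict[int, float] = {}
    counts: Dict[int, int] = {}
    for x, y in data:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def finetune_centroids(model: Dict[int, float], labeled: Sequence[Example],
                       step: float = 0.5) -> Dict[int, float]:
    # Move each centroid part of the way toward its labeled-class mean;
    # a class absent from the model starts at the labeled mean.
    target = fit_centroids(labeled)
    return {y: model.get(y, c) + step * (c - model.get(y, c)) for y, c in target.items()}


def nearest_centroid(model: Dict[int, float], x: float) -> int:
    return min(model, key=lambda y: abs(model[y] - x))


labeled = [(0.0, 0), (1.0, 0), (9.0, 1), (10.0, 1)]  # tiny, fixed labeled set
stream = (float(v) for v in range(11))               # unlabeled stream, read once
history = streaming_self_training(labeled, stream, fit_centroids, finetune_centroids,
                                  nearest_centroid, num_iterations=3, segment_size=4)
for i, m in enumerate(history):
    print(f"iteration {i}: {m}")
```

Each segment is pulled from the stream with `itertools.islice` and never reused, reflecting the abstract's emphasis on novel segments, while the labeled set is revisited at every fine-tuning step. In a real setting the toy functions would be replaced by network training loops; the schedule itself stays the same.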

Paper


Streaming Self-Training via Domain-Agnostic Unlabeled Images


Role of Domain-Agnostic Images

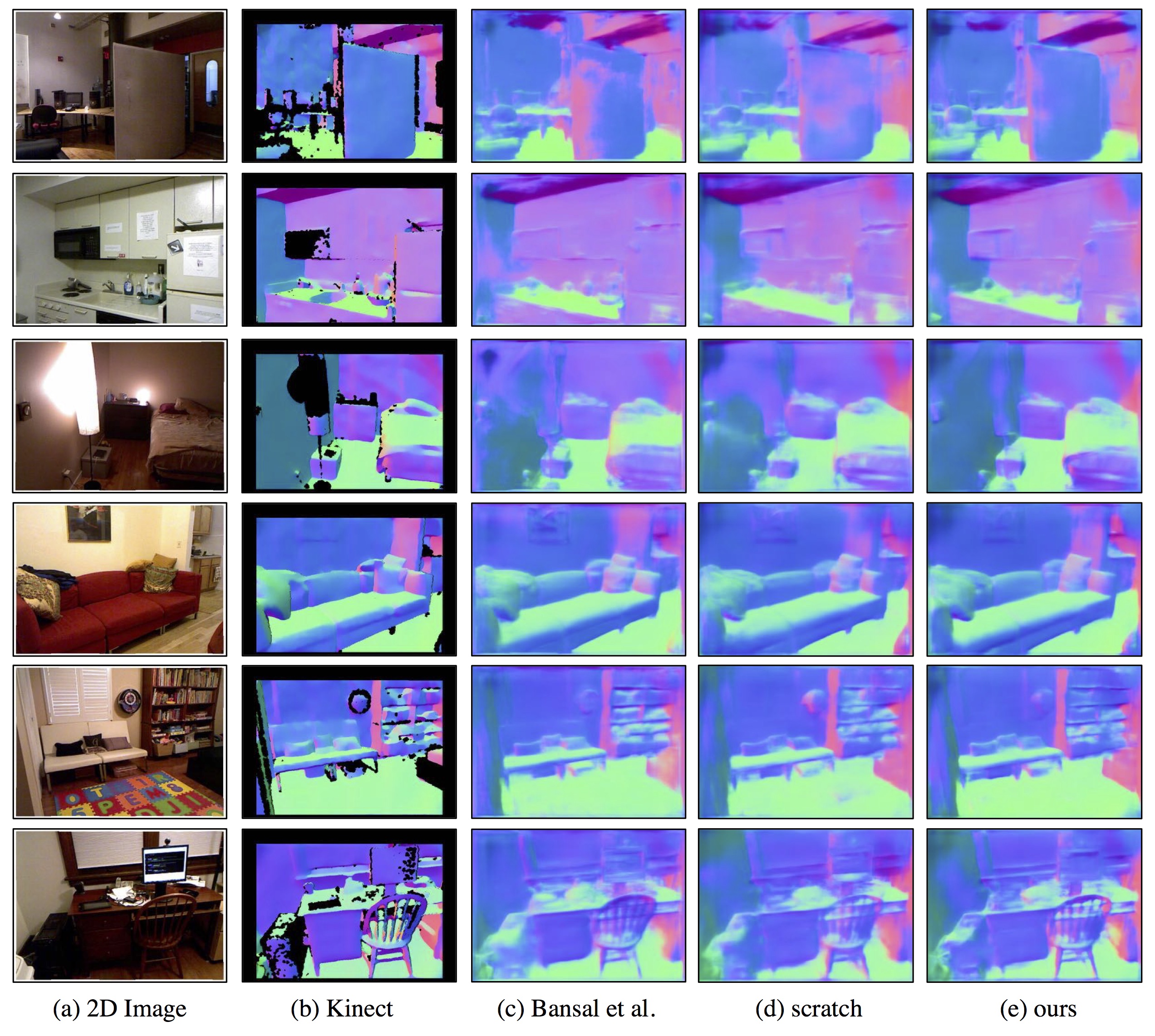

Surface Normal Estimation

Streaming Learning

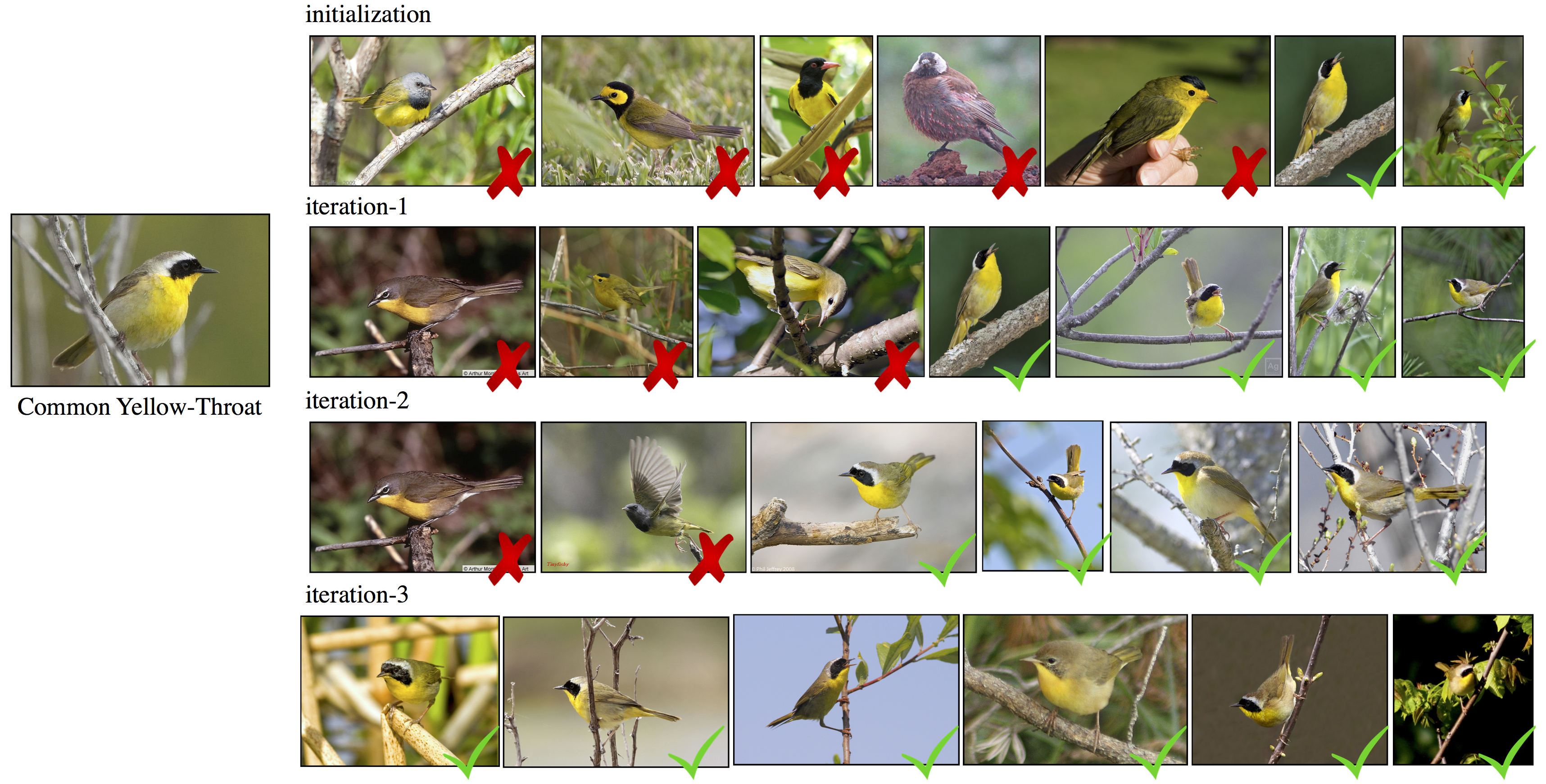

At initialization, the trained model confuses a common yellowthroat with hooded oriole, hooded warbler, wilson warbler, yellow-breasted chat, and other similar-looking birds. We get rid of false positives with every iteration. At the end of the third iteration, there are no more false positives.

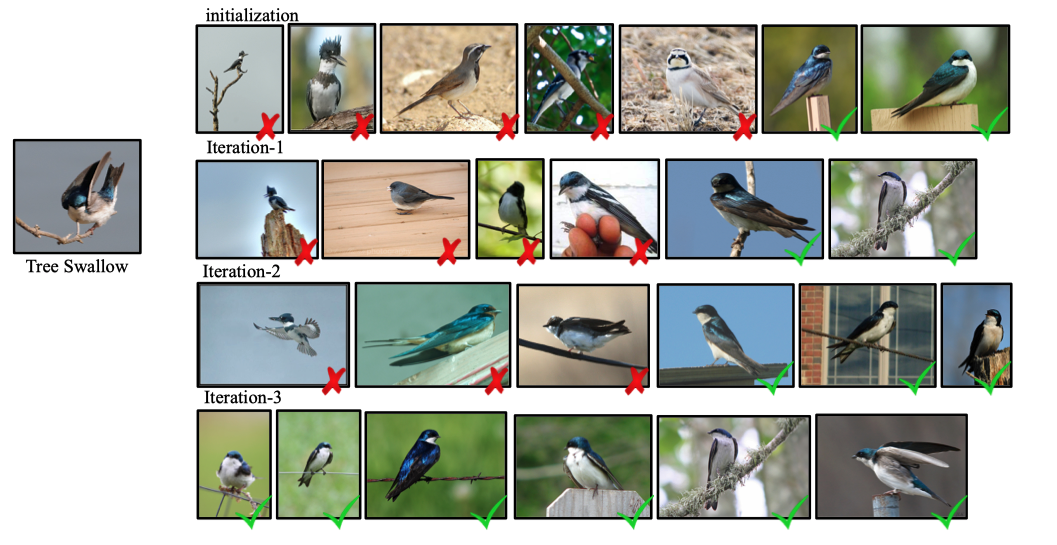

At initialization, the trained model confuses a tree swallow with dark-eyed junco, belted kingfisher, black-throated blue warbler, blue jay, etc. We get rid of false positives with every iteration. With more iterations, the false positives become fewer.

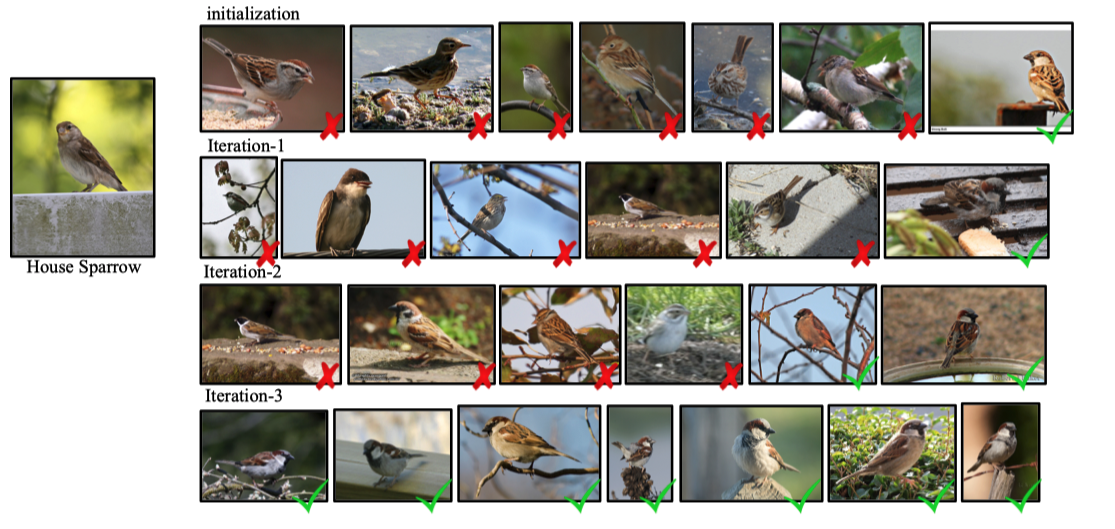

At initialization, the trained model confuses a house sparrow with savannah sparrow, field sparrow, clay-colored sparrow, tree sparrow, house wren, etc. We get rid of false positives with every iteration. With more iterations, the false positives become fewer.

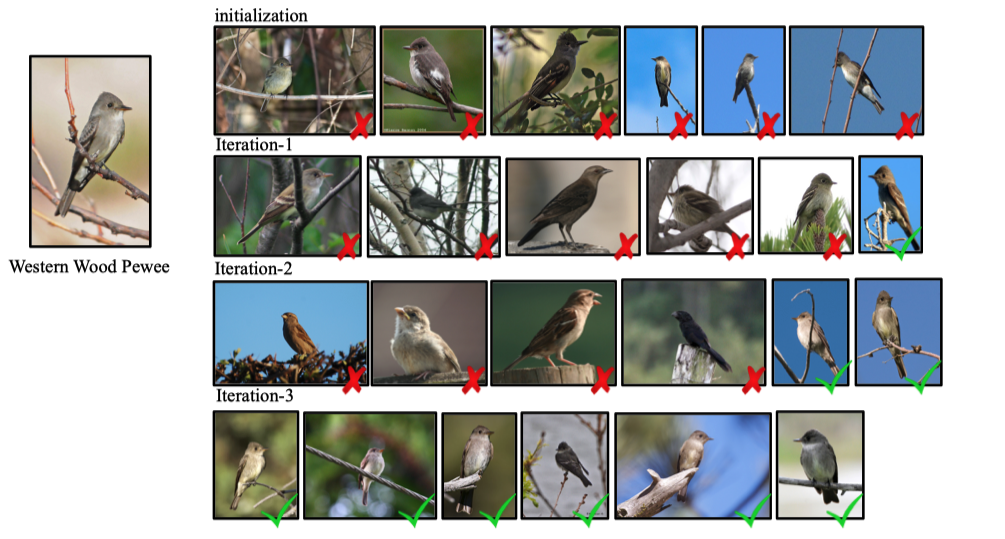

At initialization, the trained model confuses a western wood pewee with warbling vireo, olive-sided flycatcher, great crested flycatcher, least flycatcher, etc. We get rid of false positives with every iteration. With more iterations, the false positives become fewer.

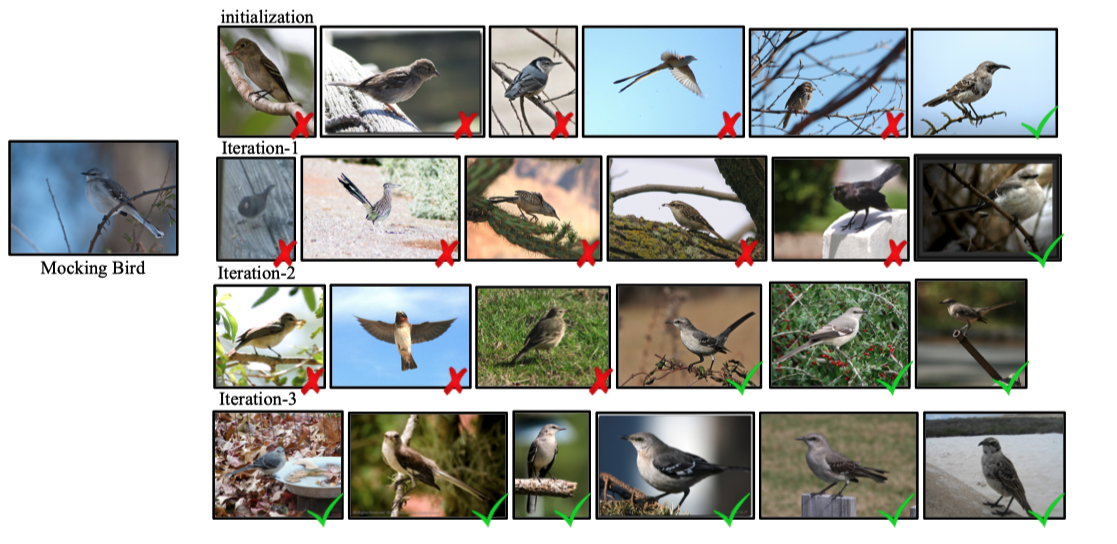

At initialization, the trained model confuses a mockingbird with sooty albatross, grasshopper sparrow, gray catbird, least flycatcher, yellow-billed cuckoo, etc. We get rid of false positives with every iteration. With more iterations, the false positives become fewer.

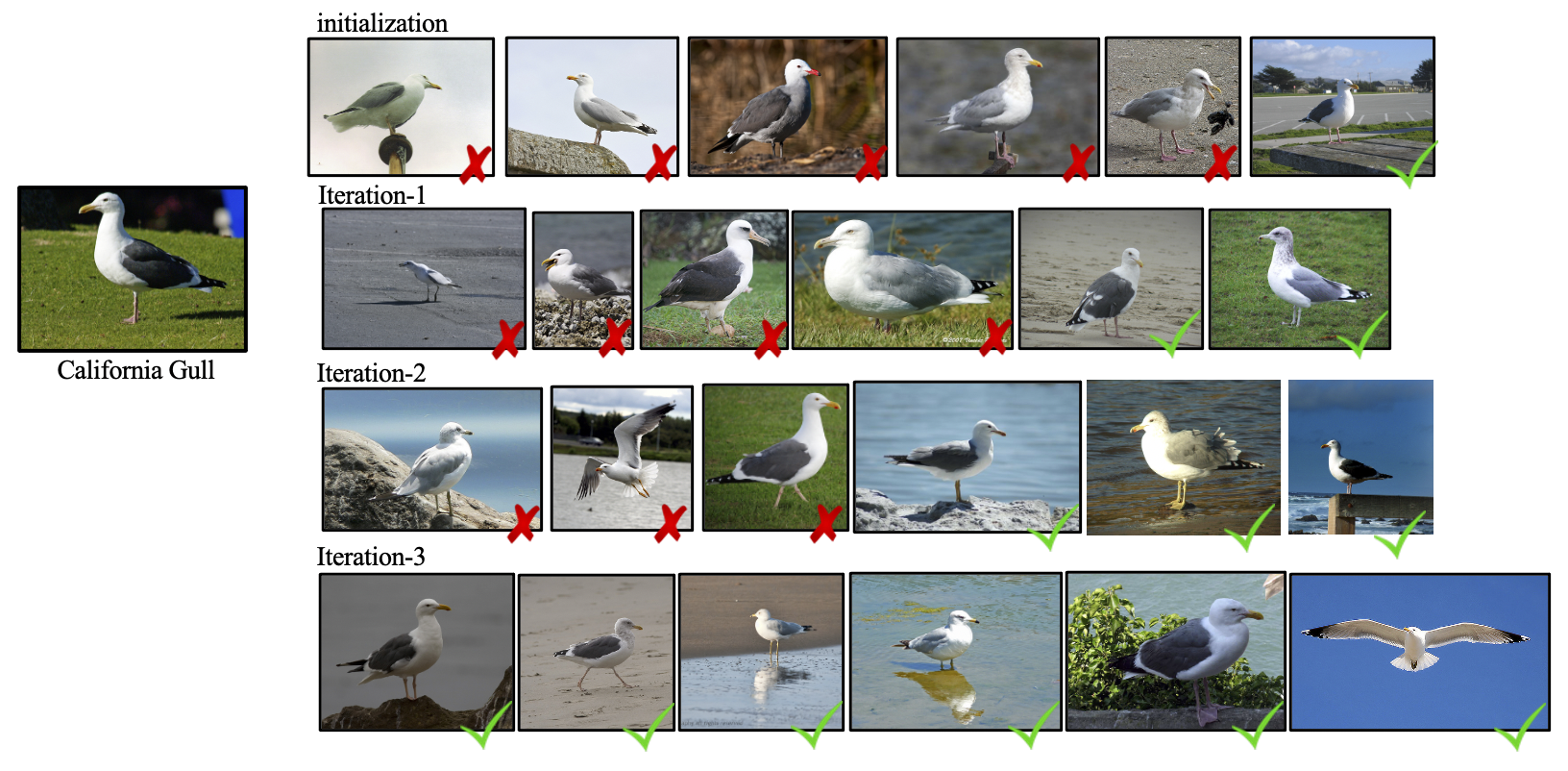

At initialization, the trained model confuses a california gull with western gull, herring gull, ring-billed gull, etc. We get rid of false positives with every iteration. With more iterations, the false positives become fewer.

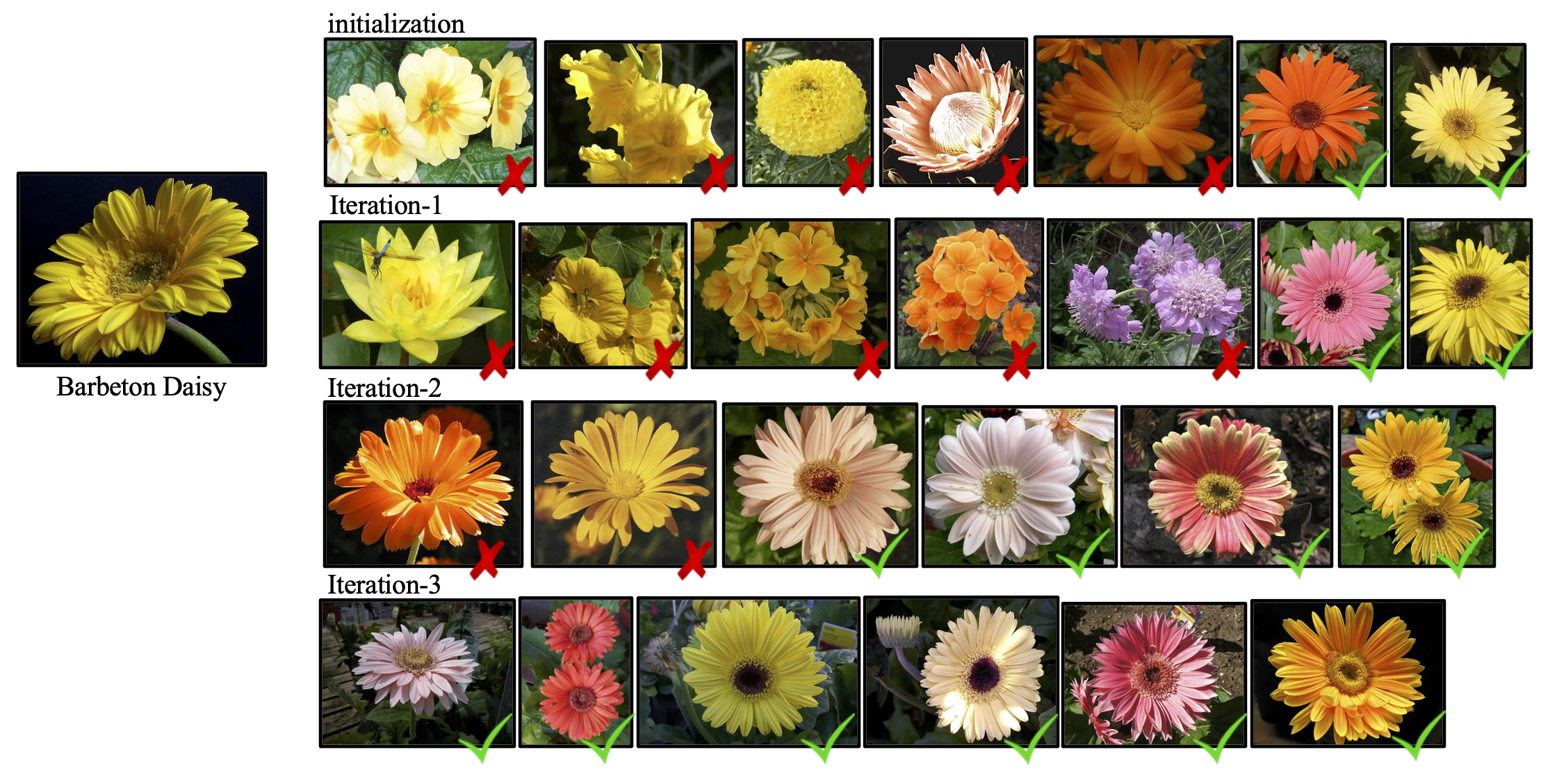

At initialization, the trained model confuses a barberton daisy with primula, water lily, daffodil, sweet william, etc. With more iterations, the false positives become fewer.

At initialization, the trained model confuses bromelia with snapdragon, rose, water lily, lotus, wallflower, etc. With more iterations, the false positives become fewer.

At initialization, the trained model confuses fire lily with tiger lily, bird of paradise, peruvian lily, canna lily, foxglove, etc. With more iterations, the false positives become fewer.

At initialization, the trained model confuses garden phlox with sword lily, sweet william, petunia, cape flower, etc. With more iterations, the false positives become fewer.

At initialization, the trained model confuses a common rose with red ginger, water lily, english marigold, anthurium, king protea, etc. With more iterations, the false positives become fewer.

At initialization, the trained model confuses silverbush with giant white arum lily, thorn apple, frangipani, fritillary, windflower, etc. With more iterations, the false positives become fewer.

Acknowledgements

This work was supported by the CMU Argo AI Center for Autonomous Vehicle Research.